What happened?

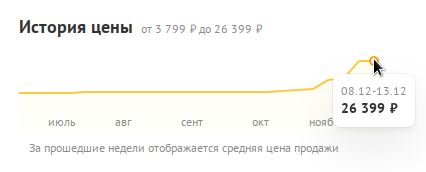

The explosive rise in prices for PC RAM modules was triggered by the news that OpenAI had reserved 40% of the world's memory chip production capacity for its data centers. Other developers of large language models (LLMs), fearing a shortage, followed suit and rushed to sign chip supply contracts. This increased the pressure on an already strained market.

A sharp surge in demand instantly inflated prices for both server and consumer memory. Retail buyers, forced to compete for supply with large corporate customers, cannot offer comparable margins. As a result, manufacturers increasingly prefer to leave the retail market for the more profitable data center segment. For example, Micron, one of the leading memory chip manufacturers, announced that it is winding down its Crucial consumer brand and will stop shipping memory modules under that brand to retail. This decision only deepened the panic among PC users.

Conspiracy theories have begun to spread among gamers and PC enthusiasts: supposedly, component manufacturers have conspired to "drive" players into cloud services, "hook" them on eternal subscriptions and deprive them of the right to fully own games.

We will not claim to know whether such a conspiracy really exists, but we suggest taking a sober look at the situation. Although, of course, we will put on a tinfoil hat first - just in case.

Why is memory getting more expensive?

For many years, the RAM market remained predictable. Of course, prices gradually increased from year to year, but this growth was never avalanche-like. Memory generations changed smoothly, and the distribution of chip types across segments was stable: the highest-performance memory went to video cards, some to mobile devices, and the rest to servers and personal computers. At the same time, PCs traditionally received the simplest and least demanding chips.

Despite its high frequencies, DDR5 is still easier to manufacture and has looser stability and reliability requirements than memory for servers or video cards. Moreover, a significant share of user PCs still runs on DDR4 - and some even on DDR3. At the same time, the move from DDR4 to DDR5 brings almost no noticeable performance gain in typical tasks, whether office applications, web browsing or even most games.

Meanwhile, such an upgrade often requires replacing not only RAM, but also the motherboard, processor - and sometimes other components. In fact, updating the memory leads to the replacement of about half of the key components of the PC, both in quantity and in total cost. In such circumstances, selling even expensive DDR5 memory to an enthusiast is not an easy task. Until recently, many users simply postponed the upgrade, not seeing any practical sense in it: the increase in performance in everyday tasks and even in most games remained minimal.

Now let's look at the situation from the point of view of manufacturers. The RAM market has long been an oligopoly: the vast majority of production and a significant part of sales are concentrated in the hands of only a few global players - primarily Samsung, Micron and SK Hynix. They are not just suppliers, but developers and owners of factories where memory chips are produced.

It is important to understand: we are not talking about ready-made RAM modules, which are assembled by dozens of companies under their own brands (Corsair, Kingston, G.Skill, AData and others), but about the manufacturers of the underlying chips - the dies without which no module can work. It is Samsung, Micron and SK Hynix that determine how many chips will be produced, of what type and for which segment.

There are also smaller players - mainly Chinese, such as YMTC or CXMT - but their share is still insignificant, and their impact on the global market is minimal. In fact, almost every memory chip in your PC, smartphone or game console is a product of one of the three giants mentioned.

And now one of them - Micron - has announced its exit from the consumer segment, completely curtailing the Crucial brand. Why?

The answer lies in the demand structure. Running modern large language models (LLMs) requires the most advanced and expensive memory: HBM (High Bandwidth Memory). These chips are packaged right next to the processor on AI and graphics accelerators: the larger the capacity and the higher the bandwidth, the faster the models train and run.

At the same time, HBM chips are significantly more expensive and more difficult to manufacture than even DDR5 - not to mention DDR4. However, the production lines at the factories are the same. This means that every DDR5 chip produced on such a line represents lost profit: the same equipment could be producing HBM, which brings in many times more.
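The opportunity-cost argument can be put in numbers. The sketch below is purely illustrative: the per-wafer profit figures are made-up placeholders, not data from any manufacturer, and the function name `lost_profit_per_wafer` is our own.

```python
def lost_profit_per_wafer(hbm_profit: float, ddr5_profit: float) -> float:
    """Profit given up by running a wafer as DDR5 instead of HBM on the same line."""
    return hbm_profit - ddr5_profit


# Hypothetical placeholder values (arbitrary units), chosen only to illustrate
# the "many times more profit" claim; real margins are not public in this form.
DDR5_PROFIT = 1_000.0
HBM_PROFIT = 4_000.0

if __name__ == "__main__":
    loss = lost_profit_per_wafer(HBM_PROFIT, DDR5_PROFIT)
    print(f"Each wafer spent on DDR5 forgoes {loss:.0f} units of profit")
```

With any numbers where HBM earns several times more per wafer, the result is the same: from the manufacturer's side, consumer memory is the product that costs money to keep making.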

Of course, it is impossible to completely abandon DDR5 - it is still needed by servers in data centers, especially those that operate as AI infrastructure. But here, too, the consumer market loses: corporations investing billions in AI are willing to pay significantly more for memory than private users.

Thus, the manufacturers' choice is predetermined: the server segment, especially the part tied to AI, is much more profitable and stable. If a gamer needs fast memory for a PC, let them pay. After all, they are already paying for top-end video cards that were originally designed not for games, but for accelerating AI calculations.

It is not surprising that more and more production capacity is being redirected towards data centers.

Thus, the "explosion" in RAM prices is not due to speculation or conspiracies, but to the cold calculation of big business: when one segment brings in many times more profit with less risk, it is logical to redistribute resources in its favor - even if it leaves ordinary users with outdated memory and empty shelves in stores.

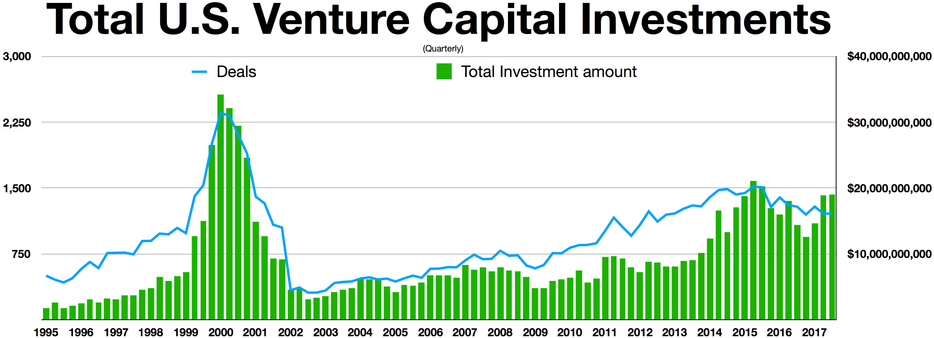

However, another, more alarming question arises. It is increasingly being said that the AI market is an inflating speculative bubble that will burst sooner or later, taking many companies with it, as happened in the era of the "dot-coms." Back then, the hype around everything related to the Internet and the .com domain created the illusion of endless growth - until reality brought investors back to earth.

Today, AI is causing similar fanaticism: every startup, every corporation, every chip - everything must be "with AI." But if the demand turns out to be artificial and the commercial return illusory, then a sharp drop in investment may lead to a large-scale reduction in orders for memory. And this, too, does not bode well for gamers. By that time, the super-profits may have already been "eaten up", and manufacturers will not have the funds for research and development.

Is AI a bubble?

Yes, it's a bubble. But - and this is fundamental - it is very different from the era of "dot-coms."

In the late 1990s, having a website in the .com domain was automatically considered a sign of "progressiveness," even if the site was empty. Today, artificial intelligence brings tangible benefits: with competent implementation, it can increase labor productivity many times over. For now, let's leave aside the cost of infrastructure, support and ownership of AI - let's focus on the essence.

However, as in those days, the mere fact of "having AI" is not enough. The technology only works when people know how to use it - and understand what it is needed for. Already today, stories are increasingly appearing in professional circles and on the Internet - of varying degrees of reliability, but with alarming regularity - that AI by itself does not increase efficiency. Moreover, in some cases, it reduces it.

Developers, carried away by AI assistants, are increasingly ceasing to think. They do not design architecture, do not think through logic - they copy-paste what the LLM has generated. And the models, in turn, often produce garbage code: formally executable, but suboptimal, poorly readable, difficult to maintain and potentially vulnerable.

The "office plankton" - as office workers are ironically called - do not understand why they need AI at all. At best, they use it to generate greeting cards, template letters to the authorities or banal reports. Such tasks give the company almost nothing economically: they do not create value and do not reduce real costs. People who work with their hands - in factories, in plants, on construction sites - may not encounter AI at all, or even computers. And AI will not be able to replace them in the near future.

But in technology companies, the picture is different. There, AI is used meaningfully: they take publicly available models or develop their own, then fine-tune them on their own data to solve narrow but important tasks - for example, interpreting satellite images, automatically sorting medical images, or generating references for designers. In such cases, AI does not replace a person but augments them: an artist, engineer or analyst gets a tool that saves hours of routine work.

This is what distinguishes the current AI boom from the era of "dot-coms": there is potential for real growth here - and it is already being realized, although so far as an exception, not a rule.

Nevertheless, AI is being implemented everywhere - especially in public companies. Why? Because if an organization does not declare its "AI transformation," it is instantly written off as a "dinosaur": it has no future, it is not worth investing in. As a result, many companies are implementing AI not because they need it, but because "it is necessary" - so as not to look outdated next to competitors.

And in this - in the blind, fashionable, ill-considered following of the trend - the similarity with the era of "dot-coms" becomes striking.

So yes: the bubble is inflating. But unlike 2000, inside it - not just air, but a technology with real, measurable value. The only question is how many AI companies will survive until that value ceases to be a marketing slogan and becomes a real result. And those who survive the bursting of the bubble will get the whole market - and will dictate their terms.

In such a struggle, all means are good. Even if it means contracting 40% of the world's RAM production capacity. Not because OpenAI needs so much itself. But because this memory is critical to its competitors - startups and corporations that are also developing AI. Deprive them of resources - and they will leave the race.

And while there is access to "free" investor money, you can afford to play the long game. Debts? Maybe they will be repaid after everyone else drowns. But if OpenAI itself drowns, there will be no one left to repay them.

Physics is a heartless science

Technological progress brings not only opportunities, but also strict physical limitations. Data centers - especially those that serve AI - devour colossal amounts of electricity. In countries already experiencing energy shortages, this inevitably leads to higher electricity tariffs.

But there is also another, less obvious, but no less acute resource issue: fresh water. Yes, it is water - and a lot of it - that is required to cool server racks. And if energy can be "green" or "yellow" (nuclear), then there is a finite amount of fresh water in the world. You can't replace it with solar panels.

Data centers and ordinary consumers compete for these resources as well.

Russia, it would seem, is lucky when it comes to energy resources and fresh water reserves. But even in our country, mining is already prohibited in some regions: the load on local power grids is becoming critical. And what awaits the country when giant data centers for AI begin to be built throughout the territory - not for cryptocurrencies, but for models that require many times more computing power?

And it's not just about AI. In one of our previous articles, about the betrayal of gamers by Nvidia, we wrote: sooner or later we will be connected to the Matrix - that is, forced to switch to cloud gaming. The reason is simple - the modern household power grid is already working at its limit. Top-end gaming PCs today consume as much as powerful household appliances - electric stoves, boilers, air conditioners. According to Steam statistics, fewer than 1% of PCs are such machines today, but that share will inevitably grow.

If your apartment is allocated, say, 5 kW, and your video card with a processor consumes more than 1 kW at peak load (and in reality - even more), then will you be comfortable playing, cooking dinner and turning on the washing machine at the same time? Want more power? Please - but pay the power supply company for connecting additional kilowatts. And it is not a fact that the network in your house will technically be able to withstand this.
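The household power budget above is simple arithmetic, and a short sketch makes it concrete. The 5 kW allocation and the 1 kW gaming PC come from the text; the stove and washing machine figures are rough assumptions for illustration, and `remaining_headroom_kw` is a hypothetical helper name.

```python
def remaining_headroom_kw(allocated_kw: float, loads_kw: dict[str, float]) -> float:
    """Return the spare capacity in kW; a negative value means the allocation is exceeded."""
    return allocated_kw - sum(loads_kw.values())


ALLOCATED_KW = 5.0  # apartment allocation, as in the text

# Peak loads in kW. Only the gaming PC figure comes from the text;
# the appliance values are assumed, typical-looking numbers.
PEAK_LOADS_KW = {
    "gaming_pc": 1.0,
    "electric_stove": 2.5,
    "washing_machine": 2.0,
}

if __name__ == "__main__":
    headroom = remaining_headroom_kw(ALLOCATED_KW, PEAK_LOADS_KW)
    status = "within" if headroom >= 0 else "over"
    print(f"Headroom: {headroom:+.1f} kW ({status} the allocation)")
```

Under these assumed loads the three devices together draw 5.5 kW against a 5 kW allocation, so running them simultaneously exceeds the limit by half a kilowatt.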

What does all this mean for PC gamers?

Prices will rise.

It doesn't matter whether the AI bubble bursts or not. The construction of data centers will continue - and not only for the sake of AI, but also for the sake of cloud services, streaming gaming, metaverses and other "digital utopias."

And given the growing geopolitical and technological fragmentation of the world, there will no longer be global, shared data centers with optimized costs. Each leading power strives to create its own AI models, hosted in its own data centers, so as not to depend on someone else's infrastructure and sanctions. When heads of state emphasize in every other speech, "We need a national AI!" - this is a signal: resources will go there, and no one will take the interests of end users into account.

Gamers have already resigned themselves to the fact that top-end video cards cost as much as a used car. Now they will have to come to terms with the explosive growth in RAM prices. And tomorrow - with rising prices for motherboards, power supplies, even SSDs. After all, data centers consume not only HBM and DDR5, but also processors, power supplies, cooling, server chassis - everything that is made in the same factories as components for PCs.

Physics is really heartless: it doesn't ask if you want to play locally or live in the "cloud." It simply dictates: resources are finite, and demand is growing. And in this race, not those who love games will survive, but those who pay more.

Is it worth running out to buy memory modules now, while they are "still available"? Cool down. New memory by itself - even the fastest DDR5 with extreme timings - will not make you happier. If your current PC copes with the games you play, if you do not hit bottlenecks, do not suffer from low FPS and do not wait half an hour for loading - do not get ahead of yourself. Technology does not stand still, but money should not vanish into thin air just because of the fear of "missing the moment."

You can always spend money. But you will never get it back. Instead of panic purchases, it is better to ask yourself a few simple questions:

- Do I really need more RAM - or am I reacting to the noise on social networks?

- Or maybe it's worth reconsidering priorities altogether: instead of chasing after hardware - buy a few good games that the current system will be enough for?

Remember: hardware "happiness" is always temporary. Today you are delighted with 64 GB of memory, tomorrow - with a new video card, the day after tomorrow - with water cooling. And the games that you really wanted to play will remain in the library, unlaunched. Or unpurchased, because you invested in an upgrade.

What does all this mean for console gamers?

Prices will rise.

At first glance, the game console market seems to be a separate universe: it does not depend directly on the retail market for PC components. Users do not choose how many gigabytes of RAM to install in PlayStation or Xbox - this is decided by Sony and Microsoft engineers.

But memory is still needed. And the more there is, the higher the performance, texture detail, resolution and the better the overall picture. And here's the difference: if a retail PC buyer today is a small fish in the ocean of data centers and AI startups, then Sony and Microsoft are giants with multi-billion dollar budgets. They have the resources to conclude long-term contracts for the supply of chips even in conditions of scarcity. The problem is not availability, but price.

Most likely, the cost of memory will increase - and this markup will fall on the end consumer. The price of the new generation of consoles will be higher not because of the "greed of corporations", but because of the objective increase in the cost of components.

According to rumors, Microsoft plans to release a premium device in the next generation - powerful, but not cheap. And, apparently, it has just become even more expensive. Sony is expected to release a "middle class" console. But by the time of release, the concept of "middle class" may radically change: performance - average by the standards of the new generation, and the price - the one that is considered top-end today.

The irony is that the very Microsoft strategy for which Phil Spencer - the head of Xbox - has been criticized in recent years may suddenly turn out to be brilliant. We are talking about the slogan "This is an Xbox", which is now "stuck" on everything capable of running Microsoft games or streaming them from the cloud: handheld consoles like the ROG Xbox Ally with its own shell, smartphones with a Game Pass Ultimate subscription, even smart TVs with Xbox Cloud Gaming.

Spencer can pick up any such gadget and say: "This is an Xbox!" - and in some ways he will be right. And suddenly Microsoft, a company capable of squandering every advantage out of the blue, will become the market leader and a visionary. Doubtful, of course, because it's Microsoft. If it weren't Microsoft and Phil Spencer, there would be more trust. But who knows?

If you are not a PC gamer and are willing to put up with the peculiarities of console gaming - restrictions in settings, lack of mods, dependence on the ecosystem, paid multiplayer - then now you should definitely not postpone buying a PlayStation or Xbox. Prices may rise, and new models, as we have already discussed, are unlikely to be cheaper. If the budget allows - buy now and play. You will get a stable, debugged platform that is guaranteed to work for several more years without having to monitor memory shortages or SSD price jumps.

And if you already own a console of the current generation - worry less. Unlike the PC segment, where each component can be updated separately, consoles are designed for a fixed life cycle. Sony and Microsoft will optimize games for your "hardware" until the very end of the generation, or even longer.

What does all this mean for smartphone gamers?

Prices will rise.

Large smartphone manufacturers are already warning: the cost of new devices may increase by at least 20%. The reason is the same: the rising cost of components, especially memory (both RAM and flash), as well as chipsets, displays and even cooling systems - all of this is made in the same factories as the components for data centers.

Should I urgently run for a new smartphone?

Here - unlike PCs and consoles - we will not just advise you to think, but recommend that you take a closer look at the current market right now. Why? Because smartphones, especially in the middle and budget segments, have an extremely short life cycle - an average of 2-3 years, and in reality even less. Already a year and a half after the purchase, many devices stop receiving security updates, and after two - they begin to noticeably slow down even in everyday tasks, not to mention games.

If your current smartphone is already "breathing its last" - do not wait until the "ideal" model appears. Most likely, by that time it will be much more expensive, and the increase in performance will be barely noticeable against the background of rising prices.

In addition, manufacturers often discontinue current models and replace them with new ones which - due to more expensive components - either keep the same price with reduced specifications (less RAM, slower memory) or keep the same hardware at an inflated price.

Therefore, if you have already planned an upgrade - now is a good time. See which models are currently on sale, compare real characteristics, not marketing slogans. Pay special attention to the amount of RAM (at least 8-12 GB for comfortable gaming) and the amount of storage. Several major (in every sense) new products are coming out on the mobile gaming market next year. And if you don't have a flagship or sub-flagship in your hands now, and if your wallet allows you to please yourself for the New Year, take a look at a new smartphone.

The situation is unlikely to improve in the coming years: the demand for memory from the AI infrastructure will not disappear, and competition for resources will only intensify.

Analysis

Don't be like the numerous "hysterical squirrels." This is not the first surge in prices for PC components - and certainly not the first in Russian history. Remember the devaluation of 1998, the mining boom that swept even moderately capable video cards off the shelves, or the recent global semiconductor shortage, which disrupted supply chains around the world. There will always be both negative and positive factors pushing component prices up and down.

However, now we are once again forced to state the obvious: you, gamer, are no longer a priority for manufacturers of video cards, memory modules and other components - for PCs or for phones. And, perhaps, it's time to simply accept this new paradigm - instead of being angry at it.

Your relationship with game developers is much more important. It is they who should adapt to your device and your preferences. If you don't have money for another upgrade, a new console or a flagship smartphone - it is they who are obliged to offer you games that you can still play. After all, it is you who pay them for the product, and not vice versa. Always keep this in mind!