Modern AI models were used to build Counter-Strike clones.

Stepan Parunashvili, co-founder and CTO of InstantDB, decided to run an experiment: he wanted to see what would happen if powerful AI models tried to create a game.

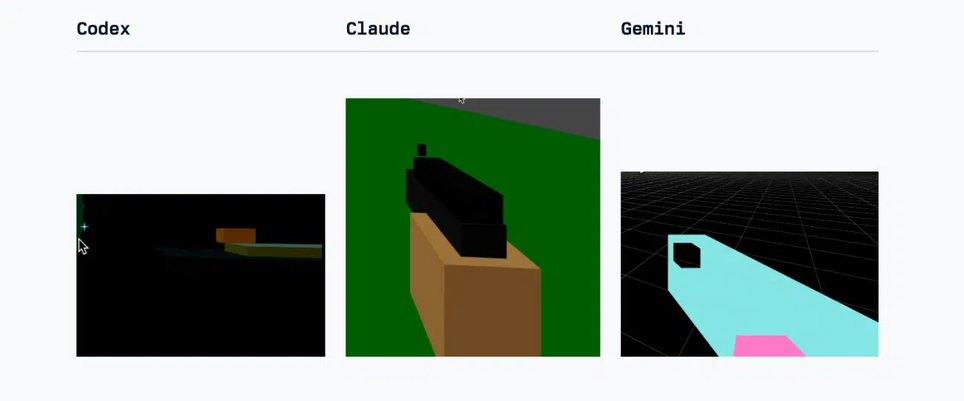

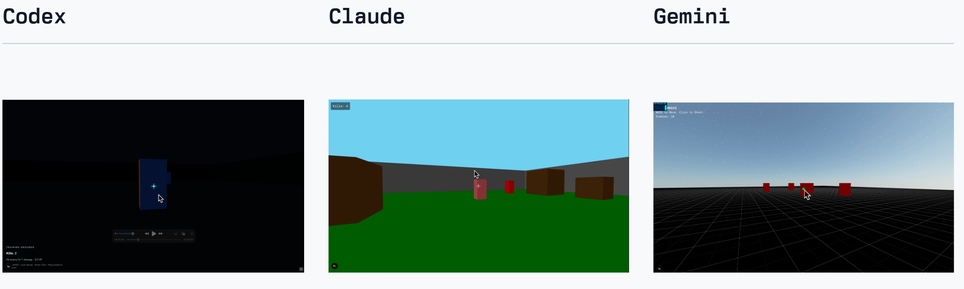

With the help of GPT-5.1 Codex Max, Gemini 3 Pro, and Claude Opus 4.5, Counter-Strike clones were developed.

The following requirements were set:

- a basic version of Counter-Strike needs to be developed;

- the game should run in a browser and be three-dimensional;

- the project is multiplayer, and everything should be done by the AI model itself (no patches from a human, no code written by hand).

Each model was given about seven consecutive requests, divided into two categories:

- Frontend: "first, the agents needed to focus only on the game mechanics - design the scene, opponents, shooting logic, and add sound effects"

- Backend: "after that, the agents had to make the game multiplayer - implement the selection of game rooms, the ability to join them, and start the battle."

Claude Opus 4.5 coped best with ambiguous engineering tasks. Codex Max remained cautious even during long debugging cycles. Gemini excelled at tasks that required strict logical reasoning over a long context.

The engineer admitted that AI models have improved, but "the promise that you will never have to look at the code does not seem 'quite real' yet."

A table of results was compiled, and "medals" were awarded for different frontend and backend parameters:

- Claude Opus 4.5 won in the "frontend" category: it created the best maps and the best models.

- Gemini 3 Pro won in the "backend" category: it coped with the task in one go.

- Codex most often took "second place": it "was good, but not outstanding in either frontend or backend."

Boris Piletskiy

03 Dec 2025 21:00

Sources:

Official InstantDB YouTube channel